DynamoDB Step By Step Process

What is DynamoDB?

Amazon DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability. Amazon DynamoDB enables customers to offload the administrative burdens of operating and scaling distributed databases to AWS, so they don’t have to worry about hardware provisioning, setup and configuration, throughput capacity planning, replication, software patching, or cluster scaling.

management of the database software and the provisioning of hardware needed to run it. Customers can deploy a non-relational database in a matter of minutes. DynamoDB automatically scales throughput capacity to meet workload demands and partitions and re-partitions your data as your table size grows. In addition, Amazon DynamoDB synchronously replicates data across three facilities in an AWS Region, giving you high availability and data durability.

availability and data durability. Read consistency represents the manner and timing in which the successful write or update of a data item is reflected in a subsequent read operation of that same item. Amazon DynamoDB exposes logic that enables you to specify the consistency characteristics you desire for each read request within your application.

eventually consistent or strongly consistent:

throughput. However, an eventually consistent read might not reflect the results of a recently completed write. Consistency across all copies of data is usually reached within a second. Repeating a read after a short time should return the updated data.

gives you the flexibility and control to request a strongly consistent read if your application, or an element of your application, requires it. A strongly consistent read returns a result that reflects all writes that received a successful response prior to the read.

attribute in a row using a single API call. Similarly, you can atomically add to or remove from sets, lists, or maps. View our documentation for more information on atomic updates.

design goals of predictable low-latency response times for storing and accessing data at any scale. The high I/O performance of SSDs also enables us to serve high-scale request workloads cost efficiently, and to pass this efficiency along in low request pricing.

Q: DynamoDB’s storage cost seems high. Is this a cost-effective service for my use case?

total cost of a solution, not just a single pricing dimension. The total cost of servicing a database workload is a function of the request traffic requirements and the amount of data stored. Most database workloads are characterized by a requirement for high I/O (high reads/sec and writes/sec) per GB stored. Amazon DynamoDB is built on SSD drives, which raises the cost per GB stored, relative to spinning media, but it also allows us to offer very low request costs. Based on what we see in typical database workloads, we believe that the total bill for using the SSD-based DynamoDB service will usually be lower than the cost of using a typical spinning media-based relational or non-relational database. If you have a use case that involves storing a large amount of data that you rarely access, then DynamoDB may not be right for you. We recommend that you use S3 for such use cases.

data item across multiple facilities within an AWS Region.

requirements increase. If you need fast, predictable performance at any scale then DynamoDB may be the right choice for you.

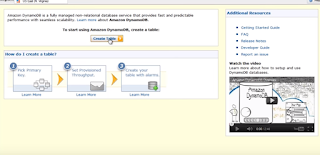

Q: How do I get started with Amazon DynamoDB?

Click “Sign Up” to get started with Amazon DynamoDB today. From there, you can begin interacting with Amazon DynamoDB using either the AWS Management Console or Amazon DynamoDB APIs. If you are using the AWS Management Console, you can create a table with Amazon DynamoDB and begin exploring with just a few clicks.

The primary key is the only required attribute for items in a table and it uniquely identifies each item. You specify the primary key when you create a table. In addition, DynamoDB provides flexible querying by letting you query on non-primary key attributes using Global Secondary Indexes and Local Secondary Indexes.

A primary key can either be a single-attribute partition key or a composite partition-sort key. A single-attribute partition key could be, for example, “UserID”. This would allow you to quickly read and write data for an item associated with a given user ID.

This multi-part key maintains a hierarchy between the first and second element values. For example, a composite partition-sort key could be a combination of “UserID” (partition) and “Timestamp” (sort). Holding the partition key element constant, you can search across the sort key element to retrieve items. This would allow you to use the Query API to, for example, retrieve all items for a single UserID across a range of timestamps.
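As a rough illustration of the composite-key lookup described above, the sketch below builds the request parameters for a Query that holds UserID constant and ranges over Timestamp. The table name `UserEvents`, the helper name `build_range_query`, and the string/number attribute types are assumptions made for this example, not part of the FAQ.

```python
def build_range_query(table_name, user_id, start_ts, end_ts):
    """Build low-level Query parameters for one UserID over a timestamp range."""
    return {
        "TableName": table_name,
        # "Timestamp" is a DynamoDB reserved word, so it is aliased as #ts.
        "KeyConditionExpression": "UserID = :uid AND #ts BETWEEN :start AND :end",
        "ExpressionAttributeNames": {"#ts": "Timestamp"},
        "ExpressionAttributeValues": {
            ":uid": {"S": user_id},          # assumed string partition key
            ":start": {"N": str(start_ts)},  # numbers are sent as strings
            ":end": {"N": str(end_ts)},
        },
    }


# Hypothetical table and values, for illustration only.
params = build_range_query("UserEvents", "user-42", 1700000000, 1700086400)
```

With an AWS SDK such as boto3, a dictionary of this shape would typically be passed to the low-level client, for example `client.query(**params)`.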

see the Secondary Indexes section in FAQ.

use the PutItem or BatchWriteItem APIs to insert items. Then you can use the GetItem, BatchGetItem, or, if composite primary keys are enabled and in use in your table, the Query API to retrieve the item(s) you added to the table.

completed on an item. To perform a conditional operation, you can define a ConditionExpression that is constructed from the following:

You can construct a free-form conditional expression that combines multiple conditional clauses, including nested clauses. Conditional operations allow users to implement optimistic concurrency control systems on DynamoDB. For more information on conditional operations, please see our documentation.
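One common way to use conditional operations for optimistic concurrency is to keep a version counter on each item and only apply a write if the counter still has the value the writer last read. The sketch below builds UpdateItem parameters for that pattern. The `Price` and `Version` attributes, the `Products` table, and the helper name are hypothetical and chosen only for illustration.

```python
def build_versioned_update(table_name, key, new_price, expected_version):
    """Build UpdateItem parameters that succeed only if Version is unchanged."""
    return {
        "TableName": table_name,
        "Key": key,
        # Write the new value and bump the version in the same request.
        "UpdateExpression": "SET #price = :price, #ver = :next",
        # The write is rejected if another writer changed the version first.
        "ConditionExpression": "#ver = :expected",
        "ExpressionAttributeNames": {"#price": "Price", "#ver": "Version"},
        "ExpressionAttributeValues": {
            ":price": {"N": str(new_price)},
            ":expected": {"N": str(expected_version)},
            ":next": {"N": str(expected_version + 1)},
        },
    }


# Hypothetical item key and values, for illustration only.
update = build_versioned_update(
    "Products", {"ProductId": {"S": "p-1"}}, new_price=25, expected_version=3
)
```

When the condition does not hold, DynamoDB rejects the request with a ConditionalCheckFailedException; the caller would normally re-read the item and retry.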

of primary keys on a table using the KeyConditionExpression parameter.

documentation page for more information on which expressions work on partition and partition-sort keys.

Amazon EC2?

an online game might start out with only a few thousand users and a light database workload consisting of 10 writes per second and 50 reads per second. However, if the game becomes successful, it may rapidly grow to millions of users and generate tens (or even hundreds) of thousands of writes and reads per second. It may also create terabytes or more of data per day.

Developing your applications against Amazon DynamoDB enables you to start small and simply dial up your request capacity for a table as your requirements scale, without incurring downtime. You pay highly cost-efficient rates for the request capacity you provision, and let Amazon DynamoDB do the work of partitioning your data and traffic over sufficient server capacity to meet your needs. Amazon DynamoDB does the database management and administration, and you simply store and request your data. Automatic replication and failover provide built-in fault tolerance, high availability, and data durability. Amazon DynamoDB gives you the peace of mind that your database is fully managed and can grow with your application requirements.

performance, and reliability, it does not have all the functionality of a relational database. It does not support complex relational queries (e.g. joins) or complex transactions. If your workload requires this functionality, or you are looking for compatibility with an existing relational engine, you may wish to run a relational engine on Amazon RDS or Amazon EC2. While relational database engines provide robust features and functionality, scaling a workload beyond a single relational database instance is highly complex and requires significant time and expertise. As such, if you anticipate scaling requirements for your new application and do not need relational features, Amazon DynamoDB may be the best choice for you.

administration.

Amazon DynamoDB focuses on providing seamless scalability and fast, predictable performance. It runs on solid state disks (SSDs) for low-latency response times, and there are no limits on the request capacity or storage size for a given table. This is because Amazon DynamoDB automatically partitions your data and workload over a sufficient number of servers to meet the scale requirements you provide. In contrast, a table in Amazon SimpleDB has a strict storage limitation of 10 GB and is limited in the request capacity it can achieve (typically under 25 writes/second); it is up to you to manage the partitioning and re-partitioning of your data over additional SimpleDB tables if you need additional scale. While SimpleDB has scaling limitations, it may be a good fit for smaller workloads that require query flexibility. Amazon SimpleDB automatically indexes all item attributes and thus supports query flexibility at the cost of performance and scale.

of non-relational database technology at Amazon.

and write access to items ranging from 1 byte up to 400KB. Amazon S3 stores unstructured blobs and is suited for storing large objects up to 5 TB. In order to optimize your costs across AWS services, large objects or infrequently accessed data sets should be stored in Amazon S3, while smaller data elements or file pointers (possibly to Amazon S3 objects) are best saved in Amazon DynamoDB.

by applications running on any operating system (e.g. Linux, Windows, iOS, Android, Solaris, AIX, HP-UX, etc.). We recommend using the AWS SDKs to get started with DynamoDB. You can find a list of the AWS SDKs on our Developer Resources page. If you have trouble installing or using one of our SDKs, please let us know by posting to the relevant AWS Forum.

Data Models and APIs

Table: A table is a collection of data items, just like a table in a relational database is a collection of rows. Each table can have an infinite number of data items. Amazon DynamoDB is schema-less, in that the data items in a table need not have the same attributes or even the same number of attributes. Each table must have a primary key. The primary key can be a single attribute key or a “composite” attribute key that combines two attributes. The attribute(s) you designate as a primary key must exist for every item as primary keys uniquely identify each item within the table.

Item: An Item is composed of a primary or composite key and a flexible number of attributes. There is no explicit limitation on the number of attributes associated with an individual item, but the aggregate size of an item, including all the attribute names and attribute values, cannot exceed 400KB.

(e.g. “Color”) and a value or set of values (e.g. “Red” or “Red, Yellow, Green”). Individual attributes have no explicit size limit, but the total value of an item (including all attribute names and values) cannot exceed 400KB.

item, including attribute names and attribute values, cannot exceed 400KB.

attributes). If an item already exists in the specified table with the same primary key, the new item completely replaces the existing item. You can also use conditional operators to replace an item only if its attribute values match certain conditions, or to insert a new item only if that item doesn’t already exist.

single request, but not as a single transaction. Supports batches of up to 25 items to Put or Delete, with a maximum total request size of 16 MB.
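Because a single batch write accepts at most 25 put or delete requests, callers with more items usually split them into chunks first. The sketch below shows one way to do that in plain Python. The helper name is hypothetical, and it checks only the item count, not the 16 MB request size limit.

```python
MAX_BATCH_ITEMS = 25  # per-request item limit stated in the FAQ


def chunk_for_batch_write(items, batch_size=MAX_BATCH_ITEMS):
    """Split a list of write requests into batches of at most 25 items."""
    if not 1 <= batch_size <= MAX_BATCH_ITEMS:
        raise ValueError("batch_size must be between 1 and 25")
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


# 60 placeholder items split into batches of 25, 25, and 10.
batches = chunk_for_batch_write(list(range(60)))
```

Each batch would then be sent as its own BatchWriteItem request; since the batch is not transactional, any unprocessed items returned by the service need to be retried by the caller.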

perform an update only if the item’s attribute values match certain conditions.

to delete an item only if the item’s attribute values match certain conditions.

The GetItem operation provides an eventually consistent read by default. If eventually consistent reads are not acceptable for your application, use ConsistentRead.

tables using their primary keys. A single response has a size limit of 16 MB and returns a maximum of 100 items. Supports both strong and eventual consistency.

index key. You can narrow the scope of the query on a table by using comparison operators or expressions. You can also filter the query results using filters on non-key attributes. Supports both strong and eventual consistency. A single response has a size limit of 1 MB.

You can limit the return set by specifying filters against one or more attributes.

Q: What is the consistency model of the Scan operation?

is eventually consistent. However, you can modify the consistency model using the optional ConsistentRead parameter in the Scan API call. Setting the ConsistentRead parameter to true will enable you to make consistent reads from the Scan operation. For more information, read the documentation for the Scan operation.

Scan API request exceeds a 1 MB limit, the given request will terminate and fetched results will be returned along with a LastEvaluatedKey (to continue the scan in a subsequent operation).

it stops the operation and returns the matching values up to that point, and a LastEvaluatedKey to apply in a subsequent operation, so that you can pick up where you left off.
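The "pick up where you left off" behavior is usually implemented as a loop that feeds each LastEvaluatedKey back as the ExclusiveStartKey of the next request. The sketch below shows that loop against any Scan- or Query-style callable. The `fetch_all` helper and the in-memory `make_fake_scan` stand-in are hypothetical and exist only so the example runs without AWS access.

```python
def fetch_all(fetch_page, **params):
    """Collect all items from a paginated Scan or Query style callable."""
    items = []
    start_key = None
    while True:
        if start_key is not None:
            # Resume from where the previous page stopped.
            params["ExclusiveStartKey"] = start_key
        page = fetch_page(**params)
        items.extend(page.get("Items", []))
        start_key = page.get("LastEvaluatedKey")
        if start_key is None:  # no key means the last page was reached
            return items


def make_fake_scan(rows, page_size):
    """Hypothetical in-memory stand-in for a Scan call, for illustration only."""
    def fake_scan(ExclusiveStartKey=None, **_ignored):
        start = 0 if ExclusiveStartKey is None else ExclusiveStartKey["pos"]
        page = {"Items": rows[start:start + page_size]}
        if start + page_size < len(rows):
            page["LastEvaluatedKey"] = {"pos": start + page_size}
        return page
    return fake_scan


all_rows = fetch_all(make_fake_scan(list(range(7)), page_size=3))
```

With a real SDK client, `fetch_page` could be the client's scan or query method, with the table name and other parameters passed as keyword arguments.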

divided by 4KB. Scanning a table with consistent reads consumes twice the read capacity as a scan with eventually consistent reads.
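The arithmetic above can be sketched as a small helper. It assumes the bytes read are rounded up to the next 4KB boundary before dividing, and that an eventually consistent scan costs half of a consistent one; the function name is hypothetical.

```python
import math

READ_UNIT_BYTES = 4 * 1024  # one read unit covers up to 4KB


def scan_read_units(bytes_scanned, consistent_read=False):
    """Estimate read capacity units consumed by scanning bytes_scanned bytes."""
    # Assumption: partial 4KB blocks are rounded up to a whole unit.
    units = math.ceil(bytes_scanned / READ_UNIT_BYTES)
    # Consistent reads cost twice as much as eventually consistent reads.
    return units if consistent_read else units / 2


one_mb = 1024 * 1024
consistent_cost = scan_read_units(one_mb, consistent_read=True)
eventual_cost = scan_read_units(one_mb)
```

Under these assumptions, a full 1 MB scan page costs 256 units with consistent reads and 128 with eventually consistent reads.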

supports collection data types: Number Set, String Set, Binary Set, heterogeneous List and heterogeneous Map. DynamoDB also supports NULL values.

collections of objects that are identified using a key and values that contain the actual content being stored.

JSON, XML, and HTML.

types are a superset of the data types supported by JSON. The document SDK will automatically map JSON documents onto native DynamoDB data types.

your DynamoDB tables, including JSON documents. To view or edit data in your table, please log in to the AWS Management Console, choose DynamoDB, select the table you want to view, then click on the “Explore Table” button.

For example, suppose you stored a JSON document that contained the following information about a person: First Name, Last Name, Zip Code, and a list of all of their friends. First Name, Last Name and Zip Code would be top-level JSON elements. You could create an index to let you query based on First Name, Last Name, or Zip Code. The list of friends is not a top-level element, therefore you cannot index the list of friends. For more information on Global Secondary Indexing and its query capabilities, see the Secondary Indexes section in this FAQ.

to determine which attributes should be retrieved from the table. Those attributes can include scalars, sets, or elements of a JSON document.

want to update.

JS and DynamoDB datatypes. With this SDK, wrapping for requests will be handled for you; similarly for responses, datatypes will be unwrapped. For more information and to download the SDK, see our GitHub repository here.

Scalability, Availability & Durability

your data set grows, Amazon DynamoDB will automatically spread your data over sufficient machine resources to meet your storage requirements.

have manually provisioned for your table using the API or the AWS Management Console. DynamoDB is able to operate at massive scale and there is no theoretical limit on the maximum throughput you can achieve. DynamoDB automatically divides your table across multiple partitions, where each partition is an independent parallel computation unit. DynamoDB can achieve increasingly high throughput rates by adding more partitions.

contact Amazon through this online form.

up or down by changing the provisioned throughput?

available, whether managed by Auto Scaling or manually.

three facilities in an AWS Region to provide fault tolerance in the event of a server failure or Availability Zone outage.

facilities within an AWS Region.

Auto Scaling

DynamoDB Auto Scaling is a fully managed feature that automatically scales up or down the provisioned read and write capacity of a DynamoDB table or a global secondary index as application requests increase or decrease.

Q. Why do I need to use Auto Scaling?

tables and reduces the operational burden of continuously monitoring consumed throughput and adjusting provisioned capacity manually. Auto Scaling helps ensure application availability and reduces costs from unused provisioned capacity.

Q. What application request patterns and workload are suited for Auto Scaling?

Auto Scaling is ideally suited for request patterns that are uniform and predictable, with sustained high and low throughput usage that lasts for several minutes to hours.

Q. How can I enable Auto Scaling for a DynamoDB table or global secondary index?

From the DynamoDB console, when you create a new table, leave the 'Use default settings' option checked to enable Auto Scaling and apply the same settings for global secondary indexes for the table. If you uncheck 'Use default settings', you can either set provisioned capacity manually or enable Auto Scaling with custom values for target utilization and minimum and maximum capacity. For existing tables, you can enable Auto Scaling or change existing Auto Scaling settings by navigating to the 'Capacity' tab, and for indexes, you can enable Auto Scaling from under the 'Indexes' tab. Auto Scaling can also be managed programmatically using the CLI or an AWS SDK. Please refer to the DynamoDB developer guide to learn more.

Q. What settings can I configure for Auto Scaling?

There are three configurable settings for Auto Scaling: Target Utilization, the percentage of actual consumed throughput relative to total provisioned throughput at a point in time; Minimum capacity, to which Auto Scaling can scale down; and Maximum capacity, to which Auto Scaling can scale up. The default value for Target Utilization is 70% (the allowed range is 20% to 80%, in one percent increments), the default minimum capacity is 1 unit, and the default maximum capacity is the table limit for your account in the region. Please refer to the Limits in DynamoDB page for region-level default table limits.
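To make the relationship between these three settings concrete, the sketch below computes the provisioned capacity at which a given consumed throughput would sit exactly at the target utilization, then clamps it to the minimum and maximum. This is a simplified model for intuition only, not the algorithm Auto Scaling actually runs; the function name and the example maximum of 5000 units are assumptions.

```python
import math


def provisioned_for_target(consumed_units, target_percent, minimum, maximum):
    """Capacity at which consumed_units equals target_percent utilization."""
    if not 20 <= target_percent <= 80:
        raise ValueError("target utilization must be between 20 and 80 percent")
    # utilization = consumed / provisioned, so provisioned = consumed / target.
    needed = math.ceil(consumed_units * 100 / target_percent)
    # Auto Scaling never goes below the minimum or above the maximum.
    return max(minimum, min(maximum, needed))


# Hypothetical workload: 700 consumed units at the default 70% target.
steady = provisioned_for_target(700, 70, minimum=1, maximum=5000)
capped = provisioned_for_target(9000, 70, minimum=1, maximum=5000)
```

In this model, 700 consumed units at a 70% target correspond to 1000 provisioned units, while a demand that would need more than the maximum is capped at 5000.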

Q. Can I change the settings of an existing Auto Scaling policy?

Yes, you can change the settings of an existing Auto Scaling policy at any time, by navigating to the 'Capacity' tab in the management console or programmatically from the CLI or SDK using the Auto Scaling APIs.

Q. How does Auto Scaling work?

When you create a new Auto Scaling policy for your DynamoDB table, Amazon CloudWatch alarms are created with thresholds for the target utilization you specify, calculated based on consumed and provisioned capacity metrics published to CloudWatch. If the table's actual utilization deviates from the target for a specific length of time, the CloudWatch alarms activate Auto Scaling, which evaluates your policy and in turn makes an UpdateTable API request to DynamoDB to dynamically increase (or decrease) the table's provisioned throughput capacity to bring the actual utilization closer to the target.

Q. Can I enable a single Auto Scaling policy across multiple tables in multiple regions?

No, an Auto Scaling policy can only be set on a single table or a single global secondary index within a single region.

Q. Can I force an Auto Scaling policy to scale up to maximum capacity or scale down to minimum capacity instantly?

No, scaling up instantly to maximum capacity or scaling down to minimum capacity is not supported. Instead, you can temporarily disable Auto Scaling, manually set the capacity you need for the required duration, and re-enable Auto Scaling later.

You can monitor the status of scaling actions triggered by Auto Scaling under the 'Capacity' tab in the management console and from CloudWatch graphs under the 'Metrics' tab.

Q. How can I tell if a table has an active Auto Scaling policy or not?

From the DynamoDB console, click on Tables in the left menu to bring up the list view of all DynamoDB tables in your account. For tables with an active Auto Scaling policy, the 'Auto Scaling' column shows either READ_CAPACITY, WRITE_CAPACITY or READ_AND_WRITE depending on whether Auto Scaling is enabled for read or write or both. Additionally, under the 'Table details' section of the 'Overview' tab of a table, the provisioned capacity label shows whether Auto Scaling is enabled for read, write or both.

Q. What happens to the Auto Scaling policy when I delete a table or global secondary index with an active policy?

When you delete a table or global secondary index from the console, its Auto Scaling policy and supporting CloudWatch alarms are also deleted.

Q. Are there any additional costs to use Auto Scaling?

No, there is no additional cost for using Auto Scaling, beyond what you already pay for DynamoDB and CloudWatch alarms. To learn about DynamoDB pricing, please visit the DynamoDB pricing page.

Q. How does throughput capacity managed by Auto Scaling work with my Reserved Capacity?

Auto Scaling works with reserved capacity in the same manner as manually provisioned throughput capacity does today. Reserved Capacity is applied to the total provisioned capacity for the region you purchased it in. Capacity provisioned by Auto Scaling will consume the reserved capacity first, billed at discounted prices, and any excess capacity will be charged at standard rates. To limit total consumption to the reserved capacity you purchased, distribute the maximum capacity limit across all tables with Auto Scaling enabled so that it is cumulatively less than the total reserved capacity amount you have purchased.

Global secondary indexes are indexes that contain a partition key or partition-and-sort key that can be different from the table's primary key.

primary key attributes. This allows applications to quickly retrieve data by specifying primary key values. However, many applications might benefit from having one or more secondary (or alternate) keys available to allow efficient access to data with attributes other than the primary key. To address this, you can create one or more secondary indexes on a table, and issue Query requests against these indexes.

A local secondary index is "local" in the sense that every partition of a local secondary index is scoped to a table partition that has the same partition key.

those on the table. A global secondary index is considered "global" because queries on the index can span all items in a table, across all partitions.

appear in an index if they exist in the table on which the index is defined. This makes queries against an index very efficient, because the number of items in the index will often be significantly less than the number of items in the table.

queries against any non-key attribute in the table.

primary key consists of UserId (partition) and GameTitle (sort). Items have attributes named TopScore, Timestamp, ZipCode, and others. Upon table creation, DynamoDB provides an implicit index (primary index) on the primary key that can support efficient queries that return a specific user’s top scores for all games.

would be inefficient, and would require scanning through the entire table. Instead, a global secondary index with GameTitle as the partition key element and TopScore as the sort key element would enable the application to rapidly retrieve top scores for a game.
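A query against such an index might look like the sketch below, which asks for the highest scores of one game by reading the index in descending sort-key order. The table name `GameScores`, the index name `GameTitle-TopScore-index`, and the helper name are hypothetical.

```python
def build_top_scores_query(table_name, index_name, game_title, limit=10):
    """Build Query parameters that return the top scores for one game."""
    return {
        "TableName": table_name,
        "IndexName": index_name,  # query the GSI instead of the table
        "KeyConditionExpression": "GameTitle = :title",
        "ExpressionAttributeValues": {":title": {"S": game_title}},
        "ScanIndexForward": False,  # descending order of the sort key, TopScore
        "Limit": limit,
    }


# Hypothetical names, for illustration only.
top_scores = build_top_scores_query(
    "GameScores", "GameTitle-TopScore-index", "TicTacToe", limit=5
)
```

Because the index is sorted by TopScore within each GameTitle, the first `limit` results are the highest scores and no table scan is needed.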

has a partition element GameTitle. In the example below, the GSI has no projected attributes, so it will just return all items (identified by primary key) that have an attribute matching the GameTitle you are querying on.

lot of different values. For example, you could create a DynamoDB table with CustomerID as the primary partition key for the table and ZipCode as the partition key for a global secondary index, since there are a lot of zip codes and since you will probably have a lot of customers. Using the primary key, you could quickly get the record for any customer. Using the global secondary index, you could efficiently query for all customers that live in a given zip code.

best practices documentation on uniform workloads.

indexes, see here. You can create a maximum of 5 global secondary indexes per table.

You can download the local version of DynamoDB here.

index. When you create a secondary index, you define the alternate key for the index, along with any other attributes that you want to be projected in the index. Amazon DynamoDB copies these attributes into the index, along with the primary key attributes from the table. You can then query the index just as you would query a table.

For instance, a GSI on GameTitle could index all items that track scores of users for every game. In this example, this GSI can be queried to return all users that have played the game "TicTacToe."

key value, whereas a GSI spans all partition key values. Since items having the same partition key value share the same partition in DynamoDB, the "Local" Secondary Index only covers items that are stored together (on the same partition). Thus, the purpose of the LSI is to query items that have the same partition key value but different sort key values. For example, consider a DynamoDB table that tracks Orders for customers, where CustomerId is the partition key.

customer.

items of the table just like the primary key. For the table above, a GSI on ProductId can be used to efficiently find all orders of a particular product. Note that in this case, no GSI sort key is specified, and even though there might be many orders with the same ProductId, they will be stored as separate items in the GSI.

total size of all elements (tables and indexes) to 10 GB per partition key value. GSIs do not enforce data co-location, and have no such restriction.

GSIs defined on the table are eventually consistent.

behavior for GSIs.

key element, with conditional filters on the GSI sort key element. However, unlike a primary key of a DynamoDB table, which must be unique, a GSI key can be the same for multiple items. If multiple items with the same GSI key exist, they are tracked as separate GSI items, and a GSI query will retrieve all of them as individual items. Internally, DynamoDB will ensure that the contents of the GSI are updated appropriately as items are added, removed or updated.

matching items’ primary keys. GSIs consume storage for projected items that exist in the source table. This enables queries to be issued against the GSI rather than the table, increasing query flexibility and improving workload distribution. Attributes that are part of an item in a table, but not part of the GSI key, primary key of the table, or projected attributes are thus not returned when querying the GSI.

Applications that need additional data from the table after querying the GSI can retrieve the primary key from the GSI and then use either the GetItem or BatchGetItem APIs to retrieve the desired attributes from the table. As GSIs are eventually consistent, applications that use this pattern have to accommodate item deletion (from the table) in between the calls to the GSI and GetItem/BatchGetItem.
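The two-step pattern described above, and the need to tolerate items that disappear between the index query and the table read, can be sketched as follows. The `fetch_full_items` helper is hypothetical, and a plain dictionary stands in for the table so the example runs without AWS access.

```python
def fetch_full_items(index_keys, get_item):
    """Look up each primary key from a GSI result, tolerating deleted items.

    get_item(key) is expected to return the item, or None if it is gone.
    """
    found, missing = [], []
    for key in index_keys:
        item = get_item(key)
        if item is None:
            # The index was stale: the item was deleted after it was indexed.
            missing.append(key)
        else:
            found.append(item)
    return found, missing


# In-memory stand-in for the table, keyed by (UserId, GameTitle).
table = {
    ("GAMER123", "TicTacToe"): {"UserId": "GAMER123", "TopScore": 900},
}
# Keys as returned by a slightly out-of-date GSI query.
stale_index_keys = [("GAMER123", "TicTacToe"), ("GAMER456", "TicTacToe")]
found, missing = fetch_full_items(stale_index_keys, table.get)
```

Here the second key no longer exists in the table, so it is reported in `missing` rather than causing an error.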

changes are made to the table. When an item (with GSI key attributes) is added to the table, DynamoDB updates the GSI asynchronously to add the new item. Similarly, when an item is deleted from the table, DynamoDB removes the item from the impacted GSI.

has. The table's primary key can include just a partition key, or it may include both a partition key and a sort key.

updated synchronously. Under normal operating conditions, a write to a global secondary index will propagate in a fraction of a second. In unlikely failure scenarios, longer delays may occur. Because of this, your application logic should be capable of handling GSI query results that are potentially out-of-date. Note that this is the same behavior exhibited by other DynamoDB APIs that support eventually consistent reads.

The partition key is UserId, and the primary sort key is GameTitle. If the application adds an item denoting a new top score for GameTitle "TicTacToe" and UserId "GAMER123," and then subsequently queries the GSI, it is possible that the new score will not be in the result of the query. However, once the GSI propagation has completed, the new item will start appearing in such queries on the GSI.

for a new or existing table from the console, you can optionally choose to apply the same settings to GSIs. You can also provision different throughput for tables and global secondary indexes manually.

table or other GSIs. Some scenarios that show this are given below:

to the table.

table.

provisioned throughput of the table.

the items. In this case, the GSI’s provisioned write capacity units should be set at 50% of the table’s provisioned write capacity units. Using a similar approach, the read throughput of the GSI can be estimated. Please see DynamoDB GSI Documentation for more details.
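The sizing rule above amounts to scaling the table's write capacity by the share of writes that touch the index. The sketch below expresses that as a rough first estimate. It assumes index entries are about the same size as table items and ignores the extra cost of updates that change a GSI key; the function name is hypothetical.

```python
import math


def estimate_gsi_write_units(table_write_units, indexed_write_fraction):
    """Rough GSI write capacity: table capacity times the indexed share."""
    if not 0 <= indexed_write_fraction <= 1:
        raise ValueError("indexed_write_fraction must be between 0 and 1")
    return math.ceil(table_write_units * indexed_write_fraction)


# Half of the writes carry the GSI key, so the GSI gets 50% of the capacity.
gsi_units = estimate_gsi_write_units(1000, 0.5)
```

Actual consumption depends on item sizes and projected attributes, so the estimate is a starting point to be checked against consumed-capacity metrics.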

to it. A write that adds or updates a GSI item will consume write capacity units based on the size of the update. The capacity consumed by the GSI write is in addition to that needed for updating the item in the table.

to a GSI, then the GSI will not consume any write capacity units. This happens when an item without any GSI key attributes is added to the DynamoDB table, or an item is updated without changing any GSI key or projected attributes.

key and projected attributes and values, and an overhead of 100 bytes for indexing purposes.

provisioned throughput can be exhausted. In such a scenario, subsequent writes to the table will be throttled. This can occur even if the table has available write capacity units.

tables.

You are charged for the aggregate provisioned throughput for a table and its GSIs by the hour. In addition, you are charged for the data storage taken up by the GSI as well as standard data transfer (external) fees. If you would like to change your GSI’s provisioned throughput capacity, you can do so using the DynamoDB Console, the UpdateTable API, or the PutScalingPolicy API for updating Auto Scaling policy settings.

the GSI to operate against. Note that a query can use only one GSI.

values and supports a subset of comparison operators. Because GSIs are updated asynchronously, you cannot use the ConsistentRead parameter with the query. Please see here for details on using GSIs with queries and scans.

index with partition-sort key schema, the ordering of the results for the same partition key is based on the sort key attribute.

console, first select the table for which you want to add a Global Secondary Index and click the “Create Index” button to add a new index. Follow the steps in the index creation wizard and select “Create” when done. You can also add or delete a Global Secondary Index using the UpdateTable API call with the GlobalSecondaryIndexUpdates parameter. You can learn more by reading our documentation page.
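As a sketch of the API route, the function below builds UpdateTable parameters that add one index. In the UpdateTable request, index changes are carried in the GlobalSecondaryIndexUpdates field, and the new key attribute must also be declared in AttributeDefinitions. The table, index, and attribute names, the string key type, and the KEYS_ONLY projection are assumptions for this example.

```python
def build_add_gsi_request(table_name, index_name, partition_attr,
                          read_units, write_units):
    """Build UpdateTable parameters that create one global secondary index."""
    return {
        "TableName": table_name,
        # The index key attribute must be declared with its type.
        "AttributeDefinitions": [
            {"AttributeName": partition_attr, "AttributeType": "S"},
        ],
        "GlobalSecondaryIndexUpdates": [
            {
                "Create": {
                    "IndexName": index_name,
                    "KeySchema": [
                        {"AttributeName": partition_attr, "KeyType": "HASH"},
                    ],
                    # Project only the index and table keys (assumed choice).
                    "Projection": {"ProjectionType": "KEYS_ONLY"},
                    "ProvisionedThroughput": {
                        "ReadCapacityUnits": read_units,
                        "WriteCapacityUnits": write_units,
                    },
                }
            }
        ],
    }


# Hypothetical names and capacities, for illustration only.
request = build_add_gsi_request("Orders", "ProductId-index", "ProductId", 5, 5)
```

The list holds a single Create entry here; deleting an index uses a Delete entry that names the index instead.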

console, select the table for which you want to delete a Global Secondary Index. Then, select the “Indexes” tab under “Table Items” and click the “Delete” button next to the index to delete it. You can also delete a Global Secondary Index using the UpdateTable API call. You can learn more by reading our documentation page.

You can only add or delete one index per API call.

finished.

table. When you provision manually, while not required, it is highly recommended that you provision additional write throughput that is separate from the throughput for the index. If you do not provision additional write throughput, the write throughput from the index will be consumed for adding the new index. This will affect the write performance of the index while the index is being created as well as increase the time to create the new index.

created?

once the process is complete.

creation process.

throughput for Global Secondary Index creation. The process of adding or deleting an index could vary from a few minutes to a few hours. For example, let's assume that you have a 1GB table that has 500 write capacity units provisioned and you have provisioned 1000 additional write capacity units for the index and new index creation. If the new index includes all the attributes in the table and the table is using all the write capacity units, we expect the index creation will take roughly 30 minutes.

will typically take less than 1 minute.

with the table. For an add index operation, while the index is being

created, the status of the index will be

“CREATING”. Once the creation of the

index is finished, the index state will change from “CREATING” to

“ACTIVE”. For

a delete index operation, when the request is complete, the deleted index will

cease to exist.
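
A minimal sketch of checking that status programmatically: the function below reads the index status out of a DescribeTable response that has already been fetched. The sample response and index name are hypothetical; only the `Table`, `GlobalSecondaryIndexes`, `IndexName`, and `IndexStatus` fields are used.

```python
def index_status(describe_table_response, index_name):
    """Return the status of the named GSI, or None if the index does not exist."""
    table = describe_table_response["Table"]
    for index in table.get("GlobalSecondaryIndexes", []):
        if index["IndexName"] == index_name:
            return index["IndexStatus"]
    # A deleted (or never created) index simply does not appear in the response.
    return None


# Hypothetical response fragment, trimmed to the fields used above.
sample = {
    "Table": {
        "TableName": "Orders",
        "GlobalSecondaryIndexes": [
            {"IndexName": "ShippingDateIndex", "IndexStatus": "CREATING"},
        ],
    }
}
status = index_status(sample, "ShippingDateIndex")
```

A caller could poll until the returned value changes from "CREATING" to "ACTIVE".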

Q: Can I get a notification when the index creation process for adding a Global Secondary Index is complete?

completed. When you add an index through the console, you can

request a notification on the last step

before creating the index. When the

index creation is complete, DynamoDB will send an SNS notification

to your

email.

index is added.

does not have to contain any of the GSI keys. If a GSI key has

both partition and sort elements, and a table

item omits either of them,

then that item will not be indexed by the corresponding GSI. In such cases, a

GSI can be very useful in efficiently locating items that have an uncommon

attribute.

The attributes included in the GSI are those that are projected

by default such as the GSI’s key attribute(s)

and table’s primary key

attribute(s), and those that the user specified to be projected. For this

reason, a

GSI query will not return attributes of items that are part of the

table, but not included in the GSI. A GSI that

specifies all attributes as

projected attributes can be used to retrieve any table attributes. See here for

documentation on using GSIs for

queries.

local secondary index key. Set, list, and map types cannot be indexed.

assumes a relatively

random access pattern across all its keys. To get the most out of secondary

index

provisioned throughput, you should select a GSI partition key attribute

that has a large number of distinct

values, and a GSI sort key attribute that

is requested fairly uniformly, as randomly as possible.

the table and each GSI.

These include:

For more details on

metrics supported by DynamoDB tables and indexes see here.

like to scan. You must specify the index partition attribute name and

value. You can optionally specify a

condition against the index key sort

attribute.

the result set?

otherwise require

retrieving a large number of items and then filtering the results. It means

your applications

can rely on more flexible queries based on a wider range of

attributes.

that share the

same partition key), DynamoDB would have fetched all objects that share a

single partition

key, and filtered the results accordingly. For instance,

consider an e-commerce application that stores

customer order data in a

DynamoDB table with partition-sort schema of customer id-order timestamp.

Without LSI, to find an answer to the question “Display all orders made by

Customer X with shipping date

in the past 30 days, sorted by shipping date”,

you had to use the Query API to retrieve all the objects

under

the partition key “X”, sort the results by shipment date and then filter

out older records.

“shipping date”

attribute and execute this query efficiently, retrieving only the

necessary items.

This significantly reduces the latency and cost of your queries

as you will retrieve only items that meet

your specific criteria. Moreover, it

also simplifies the programming model for your application as you no

longer

have to write custom logic to filter the results. We call this new secondary

index a ‘local’

secondary index because it is used along with

the partition key and hence allows you to search locally

within

a partition key bucket. So while previously you could only search using

the partition key and the

sort key, now you can also search using a

secondary index in place of the sort key, thus expanding the

number of

attributes that can be used for queries which can be conducted efficiently.
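
To make the “orders shipped in the past 30 days” example concrete, here is a hedged sketch that builds the arguments for a Query against a local secondary index. The table name `Orders`, the index name `ShippingDateIndex`, and the attribute names `CustomerId` and `ShippingDate` are all hypothetical, and dates are assumed to be stored as ISO 8601 strings so that string ordering matches date ordering.

```python
from datetime import date, timedelta


def build_recent_shipments_query(customer_id, today, days=30):
    """Return Query arguments for one customer's orders shipped in the last `days` days."""
    cutoff = (today - timedelta(days=days)).isoformat()
    return {
        "TableName": "Orders",
        "IndexName": "ShippingDateIndex",
        # Partition key equality plus a range condition on the index sort key.
        "KeyConditionExpression": "CustomerId = :cid AND ShippingDate >= :cutoff",
        "ExpressionAttributeValues": {
            ":cid": {"S": customer_id},
            ":cutoff": {"S": cutoff},
        },
        # Return results in descending order of the index sort key (newest first).
        "ScanIndexForward": False,
    }


params = build_recent_shipments_query("X", date(2024, 3, 31))
```

Because the condition is applied on the index sort key, only matching items are read, rather than every item under the partition key.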

attributes include the

table partition and sort key, plus the alternate sort key you define. You

can also

redundantly store other data attributes in the local secondary index,

in order to access those other

attributes without having to access the table

itself.

the volume of data

you can store within a single partition key value. For more information,

see the FAQ

items below about item collections.

determines the

attributes that you will be able to retrieve with the most efficiency. When you

query a

local secondary index, Amazon DynamoDB can access any of the projected

attributes, with the same

performance characteristics as if those attributes

were in a table of their own. If you need to retrieve any

attributes that are

not projected, Amazon DynamoDB will automatically fetch those attributes from

the table.

index. At a

minimum, each index entry consists of: (1) the table partition key value,

(2) an attribute to

serve as the index sort key, and (3) the table sort key

value.

the index. You

can even choose to project all attributes into the index, in which case the

index replicates

the same data as the table itself, but the data is organized

by the alternate sort key you specify.

specify the

following two parameters:

index, so

they can be returned more quickly without fetching data from the primary index,

which contains

all the items of the table. Without projected attributes, a local

secondary index contains only primary and

secondary index keys.

from a primary index, LSI

supports both strongly and eventually consistent read options.

specified for that

LSI. DynamoDB’s flexible schema means that not all items will necessarily

contain all

attributes.

local secondary

indexes are sparse, they are efficient to support queries on attributes that

are uncommon.

an item that are

included only if the order is canceled (such as CanceledDateTime,

CanceledReason).

For queries related to canceled items, a local secondary

index on either of these attributes would be

efficient since the only items

referenced in the index would be those that had these attributes present.

like to query.

You must specify the index partition attribute name and value. You can

optionally specify a

condition against the index key sort attribute.

operation,

with a cost of additional read capacity units.

index.

use a partition-sort

composite key.

adding this

capability and will be releasing it in the future. When you create a table with

a local secondary index, you may decide to create a local secondary index for

future use by defining a sort key element that is currently not used. Since

local secondary indexes are sparse, this index costs nothing until you decide to

use it.

within the table. Each

index may also specify that all non-key attributes from the primary index are

projected.

they are deleted if

you also decide to delete the entire table. We are working on adding this

capability and

will be releasing it in the future.

as part of the

table with which it is associated.

of data, with the

following differences:

mirrored to the

appropriate local secondary indexes. In these cases, write capacity will be

consumed for

the table itself, and additional write capacity will be consumed

for each relevant LSI.

consume extra units

of write capacity per 1KB of data.

attributes from the

primary index. This implicit GetItem request consumes one read capacity unit

per 4KB

of item data fetched.

index keys, for all

projected non-key attributes, plus 100 bytes per item reflected in the LSI.

index key. Set

types cannot be used.

table and all of

its local secondary indexes. Traditional partitioned (or sharded) relational

database systems

call these shards or partitions, referring to all database

items or rows stored under a partition key.

indexes. DynamoDB

stores each item collection within a single disk partition.

distinct partition key value, the sum of the item sizes in the table plus

the sum of the item sizes across all

of that table's local secondary indexes

must not exceed 10 GB.

that have one or

more local secondary indexes are affected.

secondary

indexes is not limited. The total size of an indexed table in Amazon DynamoDB

is effectively

unlimited, provided the total storage size (table and indexes)

for any one partition key value does not

exceed the 10 GB threshold.

allows the API

response to include an estimate of the relevant item collection’s size. This

estimate includes

lower and upper size estimates for the data in a particular

item collection, measured in gigabytes.

applications should examine

the API responses regarding item collection size, and log an error message

whenever an item collection exceeds a user-defined limit (8 GB, for example).

This would provide an early

warning system, letting you know that an item

collection is growing larger, but giving you enough time to

do something about

it.
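
One way such an early warning could look is sketched below. It assumes the write was issued with item collection metrics requested, so that the response carries an `ItemCollectionMetrics` entry whose `SizeEstimateRangeGB` holds the lower and upper estimates. The 8 GB threshold, the logger name, and the sample response are illustrative choices.

```python
import logging

logger = logging.getLogger("item_collections")


def check_item_collection(response, limit_gb=8.0):
    """Log an error and return True if the upper size estimate exceeds limit_gb."""
    metrics = response.get("ItemCollectionMetrics")
    if not metrics:
        # Metrics were not requested, or the table has no local secondary index.
        return False
    lower_gb, upper_gb = metrics["SizeEstimateRangeGB"]
    if upper_gb > limit_gb:
        logger.error(
            "Item collection %s is estimated at %.1f-%.1f GB (limit %.1f GB)",
            metrics.get("ItemCollectionKey"), lower_gb, upper_gb, limit_gb,
        )
        return True
    return False


# Hypothetical response fragment from a single-item write.
sample = {
    "ItemCollectionMetrics": {
        "ItemCollectionKey": {"CustomerId": {"S": "X"}},
        "SizeEstimateRangeGB": [8.0, 9.0],
    }
}
too_large = check_item_collection(sample)
```

Comparing against the upper estimate is the conservative choice here; comparing against the lower estimate would warn later.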

increase the size

of existing items, for that particular partition key. Read and write

operations that shrink

the size of the item collection are still allowed. Other

item collections in the table are not affected.

10GB. Alternatively,

you can introduce new items under a new partition key value to work around

this

problem. If your table includes historical data that is infrequently

accessed, consider archiving the historical

data to Amazon S3, Amazon Glacier

or another data store.

to scan.

You must specify the index partition attribute name and value. You can

optionally specify a

condition against the index key sort attribute.

operation,

with a cost of additional read capacity units.

result set?

attribute.

Security and Control

the table.

Specifically, the table owner can indicate who (caller) can

access which items or attributes of the

table and

perform what actions (read / write capability). FGAC is used

in concert with

AWS Identity and Access

Management (IAM), which manages the security credentials and the

associated

permissions.

application client

acting on behalf of an end user) wants to read or modify the table directly,

without a

middle-tier service. For instance, a developer of a mobile app

named Acme can use FGAC to track the top

score of every Acme user

in a DynamoDB table. FGAC allows the application client to modify only the top

score for the user that is currently running the application.

attributes in your

document. You cannot use FGAC to restrict access based on nested attributes. For

example, suppose you stored a JSON document that contained the following

information about a person:

ID, first name, last name, and a list of all of

their friends. You could use FGAC to restrict access based on

their ID, first

name, or last name, but not based on the list of friends.

onerous approaches.

Some of these are:

authorization. Such a solution increases the complexity of the system

architecture and can result in a higher

total cost of ownership (TCO).

different tables, they would be protected from one another. This could

potentially require a developer to

create millions of tables, thereby making

database management extremely painful.

this is the difficulty

in changing the token and handling its impact on the stored data. Here, the key

of the

items accessible by this client would contain the secret token.

Q: How does DynamoDB FGAC work?

items in a

specific DynamoDB table. With this token, the end user application agent can

make requests to

DynamoDB directly. Upon receiving the request, the incoming

request’s credentials are first evaluated by

DynamoDB, which will use IAM to

authenticate the request and determine the capabilities allowed for the

user.

If the user’s request is not permitted, FGAC will prevent the data from being

accessed.

storage associated

with the DynamoDB table.

create an access policy, create an IAM role for your app (e.g. a role

named AcmeFacebookUsers for a

Facebook app_id of 34567), and assign your access

policy to the role. The trust policy of the role

determines which identity

providers are accepted (e.g. Login with Amazon, Facebook, or Google), and the

access policy describes which AWS resources can be accessed (e.g. a DynamoDB

table). Using the role,

your app can now obtain temporary credentials for

DynamoDB by calling the AssumeRoleWithWebIdentity API of the AWS Security

Token Service (STS).

retrieve non-projected attributes?

attributes that are not

projected into an index. You can restrict such potentially expensive “fetch”

operations

by limiting the permissions to only projected attributes, using the

"dynamodb:Attributes" context key.

privilege, and Allow

access to only specific attributes.
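
As an illustration of allowing only specific attributes, the sketch below assembles an IAM policy document as a Python dictionary. The table ARN, account ID, and attribute names are hypothetical. The policy relies on the `dynamodb:Attributes` condition key and, so that callers must name the attributes they want, on `dynamodb:Select`.

```python
import json


def build_attribute_allow_policy(table_arn, allowed_attributes):
    """Return a policy that allows reads of the listed attributes only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:Query"],
                # Cover the table and any index on it.
                "Resource": [table_arn, table_arn + "/index/*"],
                "Condition": {
                    # Every requested attribute must be in the allowed list.
                    "ForAllValues:StringEquals": {
                        "dynamodb:Attributes": list(allowed_attributes),
                    },
                    # Reject requests that ask for all attributes implicitly.
                    "StringEqualsIfExists": {
                        "dynamodb:Select": "SPECIFIC_ATTRIBUTES",
                    },
                },
            }
        ],
    }


# Hypothetical ARN and attribute names.
policy = build_attribute_allow_policy(
    "arn:aws:dynamodb:us-west-2:123456789012:table/GameScores",
    ["UserId", "TopScore"],
)
policy_json = json.dumps(policy, indent=2)
```

The resulting JSON would be attached to the IAM role as its access policy.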

recommended for

the following reasons:

requests for every possible attribute name, until the user is

ultimately denied access.

might allow an access pattern that you

had previously intended to block.

Q: How do I prevent users from adding invalid data to a table?

changed or read. Users

can add new items without those blocked attributes, and change any value of

any

attribute that is modifiable.

NotLike, and many

others. For additional details, please see the IAM

Policy Reference.

also compare your policy to those

listed in the Amazon DynamoDB Developer Guide to make sure you

are following a

recommended pattern. You can post policies to the AWS Forums to get thoughts

from the

DynamoDB community.

identity provider they logged in with?

directly using Facebook

credentials with STS, those temporary credentials only have information about

that user’s Facebook login, and not their Amazon login, or Google login. If you

want to internally store a

mapping of each of these logins to your own stable

identifier, you can run a service that the user contacts

to log in, and then

call STS and provide them with credentials scoped to whatever partition

key value you

come up with as their canonical user id.

item collection.

throughput consumed by operations.

a table when you did not intend to give them access. To prevent this, follow

the principle of least privilege

and only allow access to the tables and

actions that you intended to allow access to.

· If you deny access to specific attributes instead of whitelisting access to

specific attributes, the caller can theoretically determine the names of the

hidden attributes if you use “allow all except for” logic. It is safer to

whitelist specific attribute names instead.

Q: Does Amazon DynamoDB support IAM permissions?

service integration.

Q: I wish to perform security analysis or operational troubleshooting on my DynamoDB tables. Can I get a history of all DynamoDB API calls made on my account?

to you. The AWS API call history

produced by AWS CloudTrail enables security analysis, resource change

tracking,

and compliance auditing. Details about DynamoDB support for CloudTrail can be

found here.

Learn more about CloudTrail at the AWS CloudTrail detail page,

and turn it on via CloudTrail's

AWS Management Console home page.

Pricing

billed by the

hour for that throughput capacity if you exceed the free tier.

requests to your

table. If you would like to change your table’s provisioned throughput

capacity, you can

do so using the AWS Management Console, the UpdateTable API

or the PutScalingPolicy API for Auto

Scaling.

transfer fees

pricing. To view

prices for other regions, visit our pricing page.

throughput, you

would be charged:

units of write

capacity and 50,000 units of read capacity, your bill would then change to:

based on your

desired target utilization and minimum and maximum capacity limits, or lets you

specify the

request throughput you want your table to be able to achieve

manually. Behind the scenes, the service

handles the provisioning of resources

to achieve the requested throughput rate. Rather than asking you to

think about

instances, hardware, memory, and other factors that could affect your

throughput rate, we

simply ask you to provision the throughput level you want

to achieve. This is the provisioned throughput

model of service.

settings for

target utilization, minimum and maximum capacity; or you can specify your

required read and

write capacity needs manually; and Amazon DynamoDB

automatically partitions and reserves the

appropriate amount of resources to

meet your throughput requirements.

based on

the partition key element of the primary key. While allocating capacity

resources, Amazon

DynamoDB assumes a relatively random access pattern across

all primary keys. You should set up your

data model so that your requests

result in a fairly even distribution of traffic across primary keys. If a table

has a very small number of heavily-accessed partition key elements,

possibly even a single very heavily-

used partition key element, traffic is

concentrated on a small number of partitions – potentially only one

partition.

If the workload is heavily unbalanced, meaning disproportionately focused on

one or a few

partitions, the operations will not achieve the overall provisioned

throughput level. To get the most out of

Amazon DynamoDB throughput, build

tables where the partition key element has a large number of

distinct

values, and values are requested fairly uniformly, as randomly as possible. An

example of a good

primary key is CustomerID if the application has many

customers and requests made to various customer

records tend to be more or less

uniform. An example of a heavily skewed primary key is “Product Category

Name”

where certain product categories are more popular than the rest.

Capacity enables you

to perform one write per second for items of up to 1KB in size. Similarly, a

unit of

Read Capacity enables you to perform one strongly consistent read per

second (or two eventually

consistent reads per second) of items of up to 4KB in

size. Larger items will require more capacity. You

can calculate the number of

units of read and write capacity you need by estimating the number of reads

or

writes you need to do per second and multiplying by the size of your items

(rounded up to the nearest KB).

read/second and

each unit of Write Capacity will give you 1 write/second of capacity. For

example, if your

items are 512 bytes and you need to read 100 items per second

from your table, then you need to

provision 100 units of Read Capacity.

and Write

Capacity that you need. For example, if your items are 4.5KB and you want to do

100 strongly

consistent reads/second, then you would need to provision 100

(read per second) x 2 (number of 4KB

blocks required to store 4.5KB) = 200

units of Read Capacity.
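
The arithmetic above can be sketched as two small functions. They assume 1 KB means 1,024 bytes and round each item up to whole 4 KB blocks for reads and whole 1 KB blocks for writes; the function names are illustrative.

```python
import math

READ_BLOCK_BYTES = 4 * 1024   # one read unit covers up to 4 KB
WRITE_BLOCK_BYTES = 1 * 1024  # one write unit covers up to 1 KB


def read_capacity_units(item_size_bytes, reads_per_second, strongly_consistent=True):
    """Read units needed for the given item size and read rate."""
    blocks = max(1, math.ceil(item_size_bytes / READ_BLOCK_BYTES))
    units = reads_per_second * blocks
    if not strongly_consistent:
        # Eventually consistent reads cost half as much.
        units = math.ceil(units / 2)
    return units


def write_capacity_units(item_size_bytes, writes_per_second):
    """Write units needed for the given item size and write rate."""
    blocks = max(1, math.ceil(item_size_bytes / WRITE_BLOCK_BYTES))
    return writes_per_second * blocks


# 4.5 KB items at 100 strongly consistent reads per second.
units_large = read_capacity_units(4608, 100)
# 512-byte items at 100 strongly consistent reads per second.
units_small = read_capacity_units(512, 100)
```

Here `units_large` is 200 and `units_small` is 100, matching the two worked examples.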

per second, not the

number of API calls. For example, if you need to read 500 items per second from

your

table, and if your items are 4KB or less, then you need 500 units of Read

Capacity. It doesn’t matter if you

do 500 individual GetItem calls or 50

BatchGetItem calls that each return 10 items.

up your data model so

that your requests result in a fairly even distribution of traffic across

primary keys.

If you have a highly uneven or skewed access pattern, you may not

be able to achieve your level of

provisioned throughput.

based on

the partition key element of the primary key. The provisioned throughput

associated with a table

is also divided among the partitions; each partition's

throughput is managed independently based on the

quota allotted to it. There is

no sharing of provisioned throughput across partitions. Consequently, a table

in Amazon DynamoDB is best able to meet the provisioned throughput levels if

the workload is spread

fairly uniformly across the partition key values. Distributing

requests across partition key values distributes

the requests across

partitions, which helps achieve your full provisioned throughput level.

throughput level, you

may be able to meet your throughput needs by increasing your provisioned

throughput level further, which will give more throughput to each partition.

However, it is recommended

that you consider modifying your request pattern

or your data model in order to achieve a relatively

random access pattern

across primary keys.

write capacity units

or 10,000 read capacity units for an individual table, you must first

contact

Amazon through this online form. If you wish to provision more than

20,000 write capacity units

or 20,000 read capacity units from a single

subscriber account you must first contact

us using the form

described above.

both Auto Scaling

and manual throughput provisioning.

free tier

applies at the account level, not the table level. In other words, if you add

up the provisioned

capacity of all your tables, and if the total capacity is no

more than 25 units of write capacity and 25 units

of read capacity, your

provisioned capacity would fall into the free tier.

API. For example,

you could increase your table’s provisioned write capacity from 1 write

capacity unit to

10,000 write capacity units with a single API call. Your

account is still subject to table-level and

account-level limits on capacity,

as described in our documentation page.

If you need to raise your

provisioned capacity limits, you can visit our Support Center, click “Open a new case”, and file a

service

limit increase request.

rate you asked

for. You are billed at an hourly rate for as long as your table holds on to

those resources.

For a complete list of prices with examples, see the DynamoDB pricing page.

make the change in the

management console, or you can use the UpdateTable API call. In either case,

Amazon DynamoDB will remain available while your provisioned throughput level

increases or decreases.

Q: How often can I change my provisioned throughput?

any time per day. A day is

defined according to the GMT time zone. Additionally, if there was no decrease

in the past four hours, an additional dial down is allowed, effectively

bringing the maximum number of

decreases in a day to 9 (4 decreases in the first 4

hours, and 1 decrease for each of the subsequent

4 hour windows in a day).

the process of

responding to your last request to change provisioned throughput. Use the

management

console or the DescribeTable API to check the status of your table.

If the status is “CREATING”,

“DELETING”, or “UPDATING”, you won’t be able to

adjust the throughput of your table. Please wait until

you have a table in

“ACTIVE” status and try again.

strongly consistent

and eventually consistent reads. If you request “1,000 read capacity units”,

DynamoDB

will allocate sufficient resources to achieve 1,000 strongly

consistent reads per second of items up to

4KB. If you want to achieve 1,000

eventually consistent reads of items up to 4KB, you will need half of

that

capacity, i.e., 500 read capacity units. For additional guidance on choosing

the appropriate throughput

rate for your table, see our provisioned throughput

guide.

Yes. For a given allocation of resources, the

read-rate that a DynamoDB table can achieve does depend

on the size of an item.

When you specify the provisioned read throughput you would like to achieve,

DynamoDB provisions its resources on the assumption that items will be less

than 4KB in size. Every

increase of up to 4KB will linearly increase the

resources you need to achieve the same throughput rate.

For example, if you

have provisioned a DynamoDB table with 100 units of read capacity, that means that

it

can handle 100 4KB reads per second, or 50 8KB reads per second, or 25 16KB

reads per second, and

so on.

Similarly the write-rate that a DynamoDB table

can achieve does depend on the size of an item. When you

specify the

provisioned write throughput you would like to achieve, DynamoDB provisions its

resources on

the assumption that items will be less than 1KB in size. Every

increase of up to 1KB will linearly increase

the resources you need to achieve

the same throughput rate. For example, if you have provisioned a

DynamoDB table

with 100 units of write capacity, that means that it can handle 100 1KB writes

per second,

or 50 2KB writes per second, or 25 4KB writes per second, and so

on.
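
The same relationship can be read in the other direction: given a fixed number of capacity units, how many operations per second fit at a given item size. The sketch below assumes item sizes are given in KB and that each item is rounded up to whole blocks (4 KB for reads, 1 KB for writes); the function name is illustrative.

```python
import math


def max_ops_per_second(capacity_units, item_size_kb, block_kb):
    """Operations per second that the given capacity supports at this item size."""
    blocks_per_item = max(1, math.ceil(item_size_kb / block_kb))
    return capacity_units // blocks_per_item


# 100 read units with a 4 KB read block.
reads = [max_ops_per_second(100, size, 4) for size in (4, 8, 16)]
# 100 write units with a 1 KB write block.
writes = [max_ops_per_second(100, size, 1) for size in (1, 2, 4)]
```

Both lists come out as `[100, 50, 25]`, the figures quoted in the two examples.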

throughput

guide.

capacity allows,

requests above your provisioned capacity will be throttled and you will receive

400 error

codes. For instance, if you had asked for 1,000 write capacity units

and try to do 1,500 writes/second of

1 KB items, DynamoDB will only allow 1,000

writes/second to go through and you will receive error code

400 on your extra

requests. You should use CloudWatch to monitor your request rate to ensure that

you

always have enough provisioned throughput to achieve the request rate that

you need.
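
On the client side, throttled requests are commonly retried with exponential backoff. The sketch below is a generic illustration rather than the behavior of any particular SDK: `ThrottledError` is a hypothetical stand-in for the throttling error, and `flaky_write` simulates a write that is throttled twice before it succeeds.

```python
import random
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for a throttling (HTTP 400) error from the service."""


def call_with_backoff(operation, max_attempts=5, base_delay=0.05, sleep=time.sleep):
    """Call operation(), retrying with jittered exponential backoff when throttled."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Wait a random time up to base_delay * 2^attempt before retrying.
            sleep(random.uniform(0, base_delay * (2 ** attempt)))


attempts = {"count": 0}


def flaky_write():
    """Simulated write that is throttled on its first two attempts."""
    attempts["count"] += 1
    if attempts["count"] <= 2:
        raise ThrottledError("simulated throttle")
    return "ok"


result = call_with_backoff(flaky_write, sleep=lambda seconds: None)
```

Retries smooth over short bursts only; a sustained request rate above the provisioned level still calls for more capacity.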

on this metric so that

you will be notified if you get close to your provisioned capacity.

increases in throughput

will typically take anywhere from a few minutes to a few hours.

same time when that extra throughput is

needed. We recommend provisioning throughput capacity

sufficiently far in

advance to ensure that it is there when you need it.

capacity in

exchange for:

Reserved Capacity applies within a single AWS

Region and can be purchased with 1-year or 3-year terms.

Every DynamoDB table

has provisioned throughput capacity associated with it, whether managed by Auto

Scaling or provisioned manually when you create or update a table. This

capacity is what determines the

read and write throughput rate that your

DynamoDB table can achieve. Reserved Capacity is a billing

arrangement and has

no direct impact on the performance or capacity of your DynamoDB tables. For

example, if you buy 100 write capacity units of Reserved Capacity, you have

agreed to pay for that much

capacity for the duration of the agreement (1 or 3

years) in exchange for discounted pricing.

Capacity”. This will take you to the

"Reserved Capacity Usage" page. Click on "Purchase Reserved

Capacity" and this will bring up a form you can fill out to purchase

Reserved Capacity. Make sure you

have selected the AWS Region in which your

Reserved Capacity will be used. After you have finished

purchasing Reserved Capacity,

you will see the purchase you made on the "Reserved Capacity Usage" page.

continue to pay for every

hour during your Reserved Capacity term regardless of your usage.

discounted rate for that

usage level. If you provision more capacity than that minimum level, you will

be

charged at standard rates for the additional capacity.

units of Reserved

Capacity and you have provisioned 300, then your Reserved Capacity purchase will

automatically cover the cost of 100 write capacity units and you will pay

standard rates for the remaining

200 write capacity units.
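
The split between reserved and standard-rate capacity in this example can be sketched as follows. The function and field names are illustrative, and no actual prices are modeled.

```python
def split_capacity(provisioned_units, reserved_units):
    """Split provisioned capacity into units billed at reserved and standard rates."""
    covered = min(provisioned_units, reserved_units)
    return {
        "reserved_rate_units": covered,
        "standard_rate_units": provisioned_units - covered,
        # Reserved units left unused are still paid for.
        "unused_reserved_units": reserved_units - covered,
    }


# 300 write capacity units provisioned against 100 units of Reserved Capacity.
bill = split_capacity(300, 100)
```

For the case above, `bill` reports 100 units at the reserved rate and 200 at standard rates.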

capacity, for the

duration of the term of the agreement, in exchange for discounted pricing. If

you use less

than your Reserved Capacity, you will still be charged each month

for that minimum amount of provisioned

throughput capacity.

purchased your Reserved

Capacity. For example, if you purchased 5,000 write capacity units of Reserved

Capacity, then you can apply that to one table with 5,000 write capacity units,

or 100 tables with 50 write

capacity units, or 1,000 tables with 5 write

capacity units, etc.

either at the Payer Account level or

Linked Account level are shared with all accounts connected to the

Payer

Account. Reserved capacity will first be applied to the account which purchased

it and then any

unused capacity will be applied to other linked accounts.

Q: What is a DynamoDB cross-region replication?

DynamoDB table (called

master table) in one or more AWS regions. After you enable cross-region

replication for a table, identical copies of the table are created in other AWS

regions. Writes to the

table will be automatically propagated to all replicas.

using DynamoDB tables from another region in case a data center failure

occurs.

DynamoDB table from the closest AWS data center.

thereby consume less read capacity in the master table.

be a master, you can migrate your application to that region more

easily.

of the table from the source region in the destination region.

When the tables are in sync, you can switch

your application to write to the

destination region.

and one or more

replica tables.

Active.

reader is

created for each replica table and copies data from the master table, keeping

the replicas in sync.

Q: How much does it cost to set up cross-region replication for a table?

While there is no additional charge for the

cross-region replication library, you pay the usual prices for

the following

resources used by the process. You will be billed for:

on the instance type you choose and the region hosting the instances.

Q: In which region does the Amazon EC2 instance hosting the cross-region replication run?

the cross-region

replication application was originally launched. You will be charged the

instance price in

this region.

change?

DynamoDB

Cross-region Replication.

cally fail over to

another instance. The application underneath uses the Kinesis Client Library

(KCL), which

checkpoints the copy. In case of an instance failure, the

application knows to find the checkpoint and

resume from there.

the read

replica is being created. The bootstrapping uses the Scan operation to copy

from the source

table. We recommend that the table is provisioned with

sufficient read capacity units to support the Scan

operation.

provisioned

capacity of the master table and replica table. The time to propagate an

item-level change on

the master table to the replica table depends on the

provisioned capacity on the master and replica tables,

and the size of the

Amazon EC2 instance running the replication application.

also update?

not result in an

update in throughput capacity on the replica table.

master table will NOT

be automatically created on the replica table. The replication application will

not

propagate changes made on secondary indices on the master table to replica

tables. You will have to

add/update/delete indexes on each of the replica

tables through the AWS Management Console as you

would with regular DynamoDB

tables.

master table to

ensure that it has enough capacity to handle all incoming writes. You can set

the

provisioned read capacity of your replica table at whatever level is

appropriate for your application.

it has been accepted by

the master table. The write will then be propagated to each replica. This means

that there will be a slight delay before a write has been propagated to all

replica tables.

the replication

group and navigating to the Monitoring tab. Metrics on throughput and number of

records processed are available, and you can monitor for any discrepancies in

the throughput of the master and

replica tables.

same region.

delete the DynamoDB metadata table.

DynamoDB Triggers

on a DynamoDB table.

You can specify the custom action in code.

include sending notifications,

updating an aggregate table, and connecting DynamoDB tables to other

data

sources.

trigger for a given

table, you can associate an AWS Lambda function to the stream (via DynamoDB

Streams) on a DynamoDB table. When the table is updated, the updates are

published to DynamoDB

Streams. In turn, AWS Lambda reads the updates from the

associated stream and executes the code in

the function.
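
A minimal sketch of such a function is shown below. It assumes the stream event delivered to AWS Lambda carries a `Records` list in which each record has an `eventName` of INSERT, MODIFY, or REMOVE; the handler name, the sample event, and the key attribute `UserId` are hypothetical.

```python
def handler(event, context):
    """Count the stream records in one batch by the type of change they describe."""
    counts = {"INSERT": 0, "MODIFY": 0, "REMOVE": 0}
    for record in event.get("Records", []):
        name = record.get("eventName")
        if name in counts:
            counts[name] += 1
    # A real trigger would act on each record here, for example by sending a
    # notification or updating an aggregate table.
    return counts


# Hypothetical batch with one new item and one deleted item.
sample_event = {
    "Records": [
        {
            "eventName": "INSERT",
            "dynamodb": {
                "Keys": {"UserId": {"S": "u1"}},
                "NewImage": {"UserId": {"S": "u1"}, "TopScore": {"N": "42"}},
            },
        },
        {
            "eventName": "REMOVE",
            "dynamodb": {"Keys": {"UserId": {"S": "u2"}}},
        },
    ]
}
summary = handler(sample_event, None)
```

Whether the old and new item images are present in each record depends on the view type chosen when the stream was enabled.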

the amount of time it takes

for your AWS Lambda function to execute. Learn more about AWS Lambda

pricing here. You are not

charged for the reads that your AWS Lambda function makes to the stream

(via

DynamoDB Streams) associated with the table.

AWS Lambda

console to create an AWS Lambda function and associate it with a stream in

DynamoDB

Streams. For more information, see the AWS Lambda FAQ page.

function to a stream

in DynamoDB Streams. For more information, see the AWS Lambda FAQ page.

Lambda function from the AWS Lambda console or through an AWS Lambda API call. For more information, see the AWS Lambda FAQ and documentation page.

Streams. You can do this from the DynamoDB console. In the table for which the stream is enabled, choose the stream, choose the Associate Lambda Function button, and then choose the function that you want to use for the DynamoDB trigger from the list of Lambda functions.

the last 24 hours. You can access a stream with a simple API call and use it to keep other data stores up-to-date with the latest changes to DynamoDB or to take actions based on the changes made to your table.

and after items are changed. This can be used to build creative extensions to your applications built on top of DynamoDB. For example, a developer building a global multi-player game using DynamoDB can use the DynamoDB Streams APIs to build a multi-master topology and keep the masters in sync by consuming the DynamoDB Streams for each master and replaying the updates in the remote masters.

As another example, developers can use the DynamoDB Streams APIs to build mobile applications that automatically notify the mobile devices of all friends in a circle as soon as a user uploads a new selfie.

Developers could also use DynamoDB Streams to keep data warehousing tools, such as Amazon Redshift, in sync with all changes to their DynamoDB table to enable real-time analytics.

DynamoDB also integrates with Elasticsearch using the Amazon DynamoDB Logstash Plugin, thus enabling developers to add free-text search for DynamoDB content.

existing DynamoDB table, select the table through the AWS Management Console, choose the Overview tab, click the Manage Stream button, choose a view type, and then click Enable.

your table, and then choose the Overview tab. Under Stream details, verify that Stream enabled is set to Yes.

DynamoDB SDK or using the Kinesis Client Library (KCL). KCL helps you consume and process the data from a stream and also helps you manage tasks such as load balancing across multiple readers, responding to instance failures, and checkpointing processed records.

may appear in DynamoDB Streams in a different order than they were received.

item in the table represents an individual player. If you make the following three updates in this order:

Update 1 and Update 3 both changed the same item (Player 1), so DynamoDB Streams will show you that Update 3 came after Update 1. This allows you to retrieve the most up-to-date high score for each player. The stream might not show all three updates in the order they were made (i.e., that Update 2 happened after Update 1 and before Update 3), but updates to each individual player’s record will be in the right order.
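The per-item ordering guarantee described above is enough to compute each player's latest high score with a simple "last record wins" pass. The sketch below is illustrative only: the PlayerId and HighScore attribute names and the score values are hypothetical, and it assumes the stream was enabled with a view type that includes the new image and that no REMOVE records are present.

```python
from typing import Any, Dict, List


def latest_score_per_player(stream_records: List[Dict[str, Any]]) -> Dict[str, int]:
    """Keep the most recent high score seen for each player.

    Records for different players may be interleaved in any order, but
    records for the same player arrive in the order the updates were made,
    so overwriting on each record leaves the newest value in place.
    """
    latest: Dict[str, int] = {}
    for record in stream_records:
        image = record["dynamodb"]["NewImage"]
        player = image["PlayerId"]["S"]
        latest[player] = int(image["HighScore"]["N"])  # numbers arrive as strings
    return latest


def _record(player: str, score: int) -> Dict[str, Any]:
    """Build a hypothetical MODIFY record for the demo data below."""
    return {
        "eventName": "MODIFY",
        "dynamodb": {
            "Keys": {"PlayerId": {"S": player}},
            "NewImage": {"PlayerId": {"S": player}, "HighScore": {"N": str(score)}},
        },
    }


# Player 2's update shows up first here, yet Player 1's two updates keep
# their relative order, which is all the function relies on.
STREAM_ORDER = [
    _record("player-2", 50),
    _record("player-1", 100),
    _record("player-1", 125),
]
```

With this input, latest_score_per_player(STREAM_ORDER) maps player-1 to 125 and player-2 to 50, regardless of where Player 2's record falls relative to Player 1's.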

the traffic to your DynamoDB table, DynamoDB will automatically adjust the capacity of the stream to allow it to continue to accept all updates.

write capacity of your DynamoDB table. For example, if you have provisioned enough capacity to update 1,000 items per second in your DynamoDB table, you could read up to 2,000 updates per second from your stream.

read the last updates that were made to your table. After 24 hours, the stream will be deleted automatically from DynamoDB Streams.

additional changes made to your DynamoDB table.

any additional changes made to your DynamoDB table. If you turn DynamoDB Streams back on, this will create a new stream in DynamoDB Streams that contains the changes made to your DynamoDB table starting from the time that the new stream was created.

once in the stream.

The stream also includes the change type (INSERT, REMOVE, or MODIFY) and the primary key for the item that changed.

information you want to include in the stream. For an existing table, use the UpdateTable API call and specify the ViewType parameter to choose what information to include in the stream.

{ KEYS_ONLY, NEW_IMAGE, OLD_IMAGE, NEW_AND_OLD_IMAGES }

included in the stream.

update are included in the stream.
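As a hedged sketch of the UpdateTable route, the helper below builds the request for enabling a stream with a chosen view type. The helper name stream_update_request and the table name GameScores are hypothetical. Note one assumption about naming: in boto3 the value is passed as StreamViewType inside a StreamSpecification structure, which is how the sketch spells it, rather than as a top-level ViewType field.

```python
from typing import Any, Dict

# The four view types listed above.
VALID_VIEW_TYPES = ("KEYS_ONLY", "NEW_IMAGE", "OLD_IMAGE", "NEW_AND_OLD_IMAGES")


def stream_update_request(table_name: str, view_type: str) -> Dict[str, Any]:
    """Build keyword arguments for an UpdateTable call that enables a stream."""
    if view_type not in VALID_VIEW_TYPES:
        raise ValueError(
            f"view_type must be one of {VALID_VIEW_TYPES}, got {view_type!r}"
        )
    return {
        "TableName": table_name,
        "StreamSpecification": {
            "StreamEnabled": True,
            "StreamViewType": view_type,
        },
    }


# With boto3 installed and credentials configured, the request could be sent as:
#   import boto3
#   boto3.client("dynamodb").update_table(
#       **stream_update_request("GameScores", "NEW_AND_OLD_IMAGES")
#   )
```

Validating the view type locally gives an immediate error for a misspelled value instead of a failed service call.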

Q: Can I use my Kinesis Client Library to access DynamoDB Streams?

You can use the DynamoDB Streams Adapter, which implements the Amazon Kinesis interface, to allow your application to use the Amazon Kinesis Client Library (KCL) to access DynamoDB Streams. For more information about using the KCL to access DynamoDB Streams, please see our documentation.

the stream and create a new one using the UpdateTable API.

stream?

deletion, insertion of a new item, or a modification to an existing item. For more information on the type of update, see our documentation.

ENABLED, all updates to your table will be represented in the stream.

updates to the table until the status changes to ENABLED.

and big data analytics. Logstash is an open source data pipeline that works together with Elasticsearch to help you process logs and other event data. The Amazon DynamoDB Logstash Plugin makes it easy to integrate DynamoDB tables with Elasticsearch clusters.

more about installing and running the plugin.

The DynamoDB Storage Backend for Titan is a plug-in that allows you to use DynamoDB as the underlying storage layer for the Titan graph database. It is a client-side solution that implements index-free adjacency for fast graph traversals on top of DynamoDB.

edges can have properties stored as key-value pairs.

database can be traversed along specific edge types, or across the entire graph. Graph databases can represent how entities relate by using actions, ownership, parentage, and so on.

a graph database is a natural choice. Therefore, graph databases are useful for modeling and querying social networks, business relationships, dependencies, shipping movements, and more.

Storage Backend for Titan, using the CloudFormation templates referred to in the documentation. You can also clone the project from the GitHub repository and start by following the Marvel and Graph-Of-The-Gods tutorials on your own computer, using the instructions in the documentation.

When you’re ready to expand your testing or run in production, you can switch the backend to use the DynamoDB service. Please see the AWS documentation for further guidance.

workloads without having to manage your own cluster for graph storage. However, the plugin does not provision or manage the client side. For simple provisioning of Titan, we have developed a CloudFormation template that sets up the DynamoDB Storage Backend for Titan with Gremlin Server; see the instructions in the documentation.

using DynamoDB as the storage backend for a Titan graph workload.

you can switch from a different storage backend to DynamoDB with minimal changes to your application.